Since the beginning of the project we’ve spoken about variables on multiple levels. Of course flowbits defined by the Snort language came first, but other flow based variables quickly followed: flowints for basic counting, and vars for extracting data using pcre expressions.

I’ve always thought of the pcre data extraction using substring capture as a potentially powerful feature. However the implementation was lacking. The extracted data couldn’t really be used for much.

Internals

Recently I’ve started work to address this. The first thing that needed to be done was to move the mapping between variable names, such as flowbit names, and the internal ids out of the detection engine. The detection engine is not available in the logging engines, and logging of the variables was one of my goals.

This is a bit tricky as we want a lockless data structure to avoid runtime slowdowns. However, rule reloads need to be able to update it. The solution I’ve created has a read-only lookup structure after initialization that is ‘hot swapped’ with the new data at reload.
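To illustrate the hot swap idea, here is a minimal Python sketch. It is not the actual C code in Suricata: the class name `VarNameStore` and its methods are made up for this example. It assumes that readers only ever see a complete, read-only table, and that a reload replaces the table by rebinding a single reference.

```python
from types import MappingProxyType


class VarNameStore:
    """Hypothetical name -> id store; an illustration, not Suricata's code."""

    def __init__(self, names):
        self._table = self._build(names)

    @staticmethod
    def _build(names):
        # Build the complete table first, then expose it as a read-only view.
        return MappingProxyType({name: idx for idx, name in enumerate(names, start=1)})

    def lookup(self, name):
        # Readers take the current table once and never take a lock.
        table = self._table
        return table.get(name)

    def reload(self, names):
        # The replacement is built off to the side and swapped in one step.
        self._table = self._build(names)


store = VarNameStore(["ET.http.binary", "exe.no.referer"])
old_table = store._table
store.reload(["ua/os/windows"])
```

A reader that still holds `old_table` keeps working with consistent data, while new lookups see the reloaded names.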

PCRE

The second part of the work is to allow for more flexible substring capture. There are 2 limitations in the current code: first, only a single substring can be captured per rule. Second, the names of the variables are limited by libpcre: 32 chars, with hardly any special chars like dots. The way to express these names has been a bit of a struggle.

The old way looks like this:

pcre:"/(?P<flow_somename>.*)<somename>/";

This creates a flow based variable named ‘somename’ that is filled by this pcre expression. The ‘flow_’ prefix can be replaced by ‘pkt_’ to create a packet based variable.

In the new method the names are no longer inside the regular expression, but they come after the options:

pcre:"/([a-z]+)\/[a-z]+\/(.+)\/(.+)\/changelog$/GUR, \
    flow:ua/ubuntu/repo,flow:ua/ubuntu/pkg/base, \
    flow:ua/ubuntu/pkg/version";

After the regular pcre regex and options comes a comma separated list of variable names. The prefix here is ‘flow:’ or ‘pkt:’ and the names can contain special characters now. The names map to the capturing substring expressions in order.
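The in-order mapping of names to captures can be sketched in a few lines of Python. This is only an illustration of the idea: the function `extract_vars` and the shortened input string are made up, and the Suricata specific pcre options (GUR) are not modeled.

```python
import re


def extract_vars(pattern, names, data):
    """Map capture groups to 'scope:name' variables, in order."""
    match = re.search(pattern, data)
    if match is None:
        return {}
    groups = match.groups()
    if len(groups) != len(names):
        raise ValueError("need exactly one variable name per capture group")
    result = {}
    for spec, value in zip(names, groups):
        # The prefix before the first ':' selects flow or packet scope.
        scope, _, name = spec.partition(":")
        if scope not in ("flow", "pkt"):
            raise ValueError(f"unknown scope in {spec!r}")
        result[(scope, name)] = value
    return result


# Hypothetical, shortened input for the example.
uri = "main/libx/libxml2/libxml2_2.7.8/changelog"
names = [
    "flow:ua/ubuntu/repo",
    "flow:ua/ubuntu/pkg/base",
    "flow:ua/ubuntu/pkg/version",
]
extracted = extract_vars(r"([a-z]+)/[a-z]+/(.+)/(.+)/changelog$", names, uri)
```

Because the names live outside the regular expression, they are free to contain characters such as ‘/’ that a named capture group would not accept.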

Key/Value

While developing this a logical next step became extraction of key/value pairs. One capture would be the key, the second the value. The notation is similar to the last:

pcre:"/^([A-Z]+) (.*)\r\n/G, pkt:key,pkt:value";

‘key’ and ‘value’ are simply hardcoded names to trigger the key/value extraction.
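As a rough sketch of what key/value extraction means: the first capture becomes the name of the variable and the second capture its value. The helper `extract_key_value` and the sample input are made up for this example, and the pcre options are left out.

```python
import re


def extract_key_value(pattern, data):
    """Use capture 1 as the variable name and capture 2 as its value."""
    match = re.search(pattern, data)
    if match is None:
        return None
    return match.group(1), match.group(2)


# Hypothetical input line with an upper case keyword followed by an argument.
pair = extract_key_value(r"^([A-Z]+) (.*)\r\n", "USER alice\r\n")
no_pair = extract_key_value(r"^([A-Z]+) (.*)\r\n", "user alice\r\n")
```

Here `pair` holds the key ‘USER’ with the value ‘alice’, while the lower case input does not match at all.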

Logging

Things start to get interesting when logging is added. First, by logging flowbits, existing rulesets can benefit.

{
  "timestamp": "2009-11-24T06:53:35.727353+0100",
  "flow_id": 1192299914258951,
  "event_type": "alert",
  "src_ip": "69.49.226.198",
  "src_port": 80,
  "dest_ip": "192.168.1.48",
  "dest_port": 1077,
  "proto": "TCP",
  "tx_id": 0,
  "alert": {
    "action": "allowed",
    "gid": 1,
    "signature_id": 2018959,
    "rev": 2,
    "signature": "ET POLICY PE EXE or DLL Windows file download HTTP",
    "category": "Potential Corporate Privacy Violation",
    "severity": 1
  },
  "http": {
    "hostname": "69.49.226.198",
    "url": "/x.exe",
    "http_user_agent": "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1)",
    "http_content_type": "application/octet-stream",
    "http_method": "GET",
    "protocol": "HTTP/1.1",
    "status": 200,
    "length": 23040
  },
  "vars": {
    "flowbits": {
      "exe.no.referer": true,
      "http.dottedquadhost": true,
      "ET.http.binary": true
    }
  }
}

When rules are created to extract info and set specific ‘information’ flowbits, logging can create value:

"vars": {
  "flowbits": {
    "port/http": true,
    "ua/os/windows": true,
    "ua/tool/msie": true
  },
  "flowvars": {
    "ua/tool/msie/version": "6.0",
    "ua/os/windows/version": "5.1"
  }
}

"http": {
  "hostname": "db.local.clamav.net",
  "url": "/daily-15405.cdiff",
  "http_user_agent": "ClamAV/0.97.5 (OS: linux-gnu, ARCH: x86_64, CPU: x86_64)",
  "http_content_type": "application/octet-stream",
  "http_method": "GET",
  "protocol": "HTTP/1.0",
  "status": 200,
  "length": 1688
},
"vars": {
  "flowbits": {
    "port/http": true,
    "ua/os/linux": true,
    "ua/arch/x64": true,
    "ua/tool/clamav": true
  },
  "flowvars": {
    "ua/tool/clamav/version": "0.97.5"
  }
}

In the current code the alert and http logs are showing the ‘vars’.

Next to this, an ‘eve.vars’ log is added, which is a specific output of vars independent of other logs.
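Consuming the ‘vars’ object from a log record could look something like the Python sketch below. It assumes one JSON record per line with the structure shown in the examples above. The function name `collect_vars` is made up, and the record is built inline rather than read from a real log file.

```python
import json


def collect_vars(eve_line):
    """Return (set flowbits, flowvars) from one JSON log record."""
    record = json.loads(eve_line)
    variables = record.get("vars", {})
    # Only keep the flowbits that are actually set.
    flowbits = sorted(
        name for name, is_set in variables.get("flowbits", {}).items() if is_set
    )
    flowvars = dict(variables.get("flowvars", {}))
    return flowbits, flowvars


# Record assembled from the example output above.
line = json.dumps({
    "event_type": "alert",
    "vars": {
        "flowbits": {"port/http": True, "ua/os/windows": True, "ua/tool/msie": True},
        "flowvars": {"ua/tool/msie/version": "6.0", "ua/os/windows/version": "5.1"},
    },
})
bits, values = collect_vars(line)
```

Records without a ‘vars’ object simply yield an empty list and an empty dict.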

Use cases

Some of the use cases could be to add more information to logs without having to add code. For example, I have a set of rules that extracts the packages that are installed by apt-get or for which Ubuntu’s updater gets change logs:

"vars": {
  "flowbits": {
    "port/http": true,
    "ua/tech/python/urllib": true
  },
  "flowvars": {
    "ua/tech/python/urllib/version": "2.7",
    "ua/ubuntu/repo": "main",
    "ua/ubuntu/pkg/base": "libxml2",
    "ua/ubuntu/pkg/version": "libxml2_2.7.8.dfsg-5.1ubuntu4.2"
  }
}

It could even be used as a simple way to ‘parse’ protocols and create logging for them.

Performance

Using rules to extract data from traffic is not going to be cheap for 2 reasons. First, Suricata’s performance mostly comes from avoiding inspecting rules. It has a lot of tricks to make sure as few rules as possible are evaluated. Likewise, the rule writers work hard to make sure their rules are only evaluated if they have a good chance of matching.

The rules that extract data from user agents or URIs are going to be matching very often. So even if the rules are written to be efficient they will still be evaluated a lot.

Secondly, extraction currently can be done through PCRE and through Lua scripts. Neither is very fast.

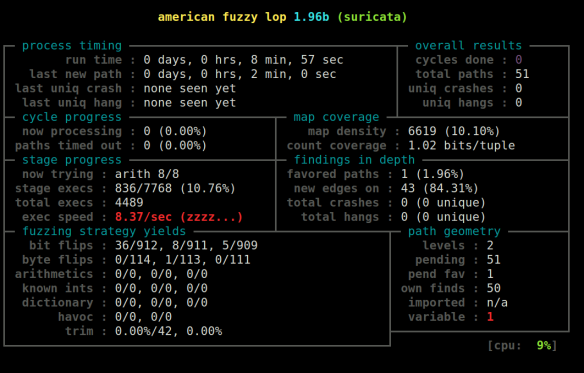

Testing the code

Check out this branch https://github.com/inliniac/suricata/pull/2468 or its replacements.

Bonus: unix socket hostbits

Now that variable names can exist outside of the detection engine, it’s also possible to add unix socket commands that modify them. I created this for ‘hostbits’. The idea here is to simply use hostbits to implement white/blacklists. A set of unix socket commands will be added to add and remove them. The existing hostbits implementation handles expiration and matching.

To block on the blacklist:

drop ip any any -> any any (hostbits:isset,blacklist; sid:1;)

To pass all traffic on the whitelist:

pass ip any any -> any any (hostbits:isset,whitelist; sid:2;)

Both rules are ‘ip-only’ compatible, so will be efficient.

A major advantage of this approach is that the black/whitelists can be modified from the rulesets themselves, just like any hostbit.

E.g.:

alert tcp any any -> any any (content:"EVIL"; \
    hostbits:set,blacklist; sid:3;)

A new ‘list’ can be created this way by simply creating a rule that references a hostbit name.

Unix Commands

Unix socket commands to add and remove hostbits need to be added.

Add:

suricatasc -c "add-hostbit <ip> <hostbit> <expire>"

suricatasc -c "add-hostbit 1.2.3.4 blacklist 3600"

If a hostbit is added that already exists for that host, its expiry timer is updated.

Hostbits expire after the expiration timer passes. They can also be manually removed.

Remove:

suricatasc -c "remove-hostbit <ip> <hostbit>"

suricatasc -c "remove-hostbit 1.2.3.4 blacklist"
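The add, expire and remove behavior described above can be modeled with a small Python sketch. This is a toy model and not how Suricata stores hostbits: the class `HostBits` is made up, and time is passed in explicitly as seconds to keep the example deterministic.

```python
class HostBits:
    """Toy model of per-host bits with expiry; not Suricata's implementation."""

    def __init__(self):
        self._bits = {}  # (ip, name) -> absolute expiry time in seconds

    def add(self, ip, name, expire, now):
        # Adding a bit that already exists just refreshes its expiry timer.
        self._bits[(ip, name)] = now + expire

    def remove(self, ip, name):
        # Manual removal; removing an unknown bit is a no-op.
        self._bits.pop((ip, name), None)

    def isset(self, ip, name, now):
        expiry = self._bits.get((ip, name))
        if expiry is None:
            return False
        if now >= expiry:
            # Expired bits are dropped lazily on lookup.
            del self._bits[(ip, name)]
            return False
        return True


bits = HostBits()
bits.add("1.2.3.4", "blacklist", 3600, now=0)
bits.add("1.2.3.4", "blacklist", 3600, now=3000)  # refreshes the timer
still_set = bits.isset("1.2.3.4", "blacklist", now=6000)
expired = not bits.isset("1.2.3.4", "blacklist", now=6600)
```

A rule with ‘hostbits:isset,blacklist’ corresponds to the `isset` check in this model.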

Feedback & Future work

I’m looking forward to getting some feedback on a couple of things:

- log output structure and logic. The output needs to be parseable by things like ELK, Splunk and jq.

- pcre options notation

- general feedback about how it runs

Some things I’ll probably add:

- storing extracted data into hosts, ippairs

- more logging

Some other ideas:

- extraction using a dedicated keyword, so outside of pcre

- ‘int’ extraction

Let me know what you think!
